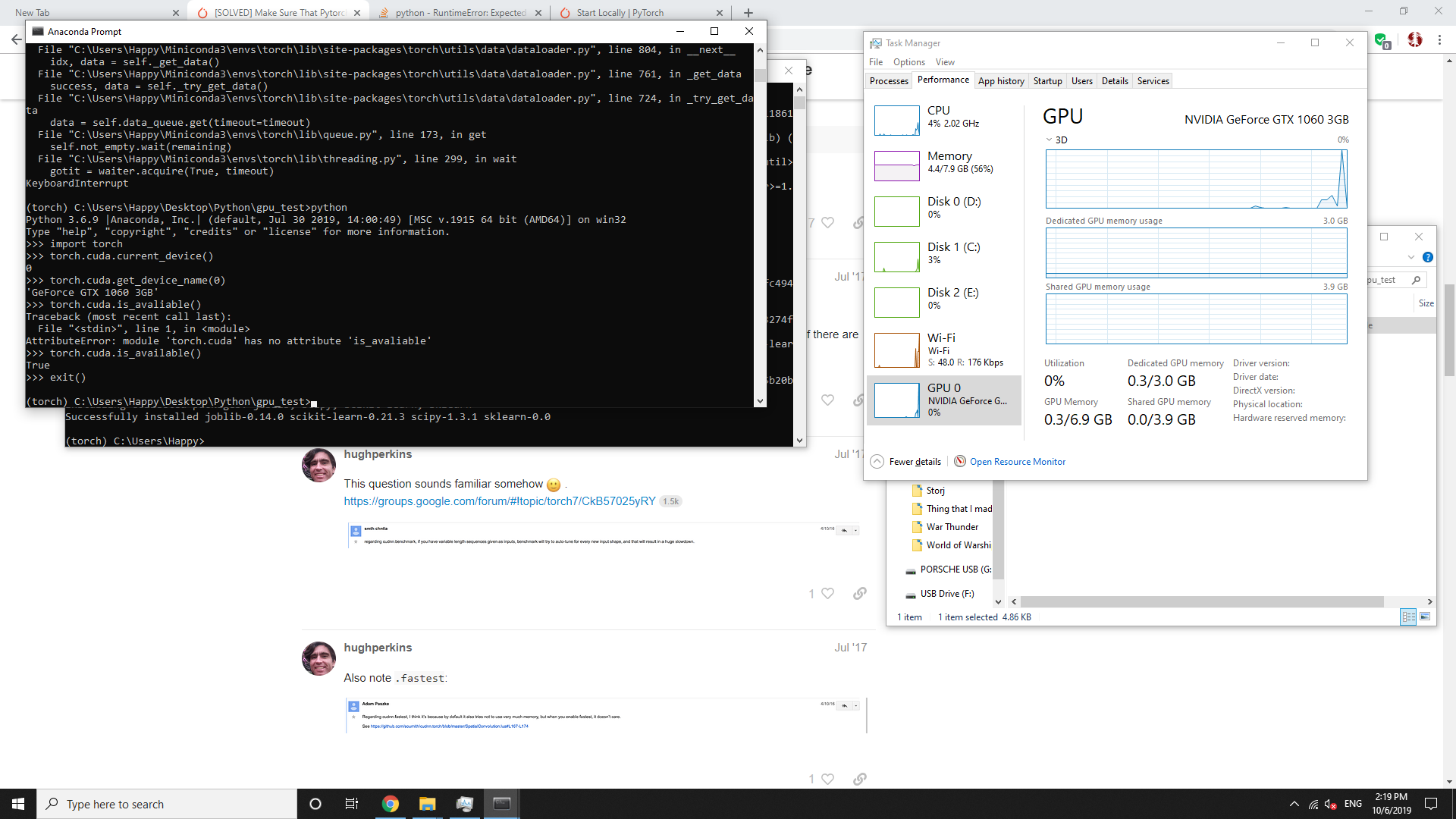

Executing a Python Script on GPU Using CUDA and Numba in Windows 10 | by Nickson Joram | Geek Culture | Medium

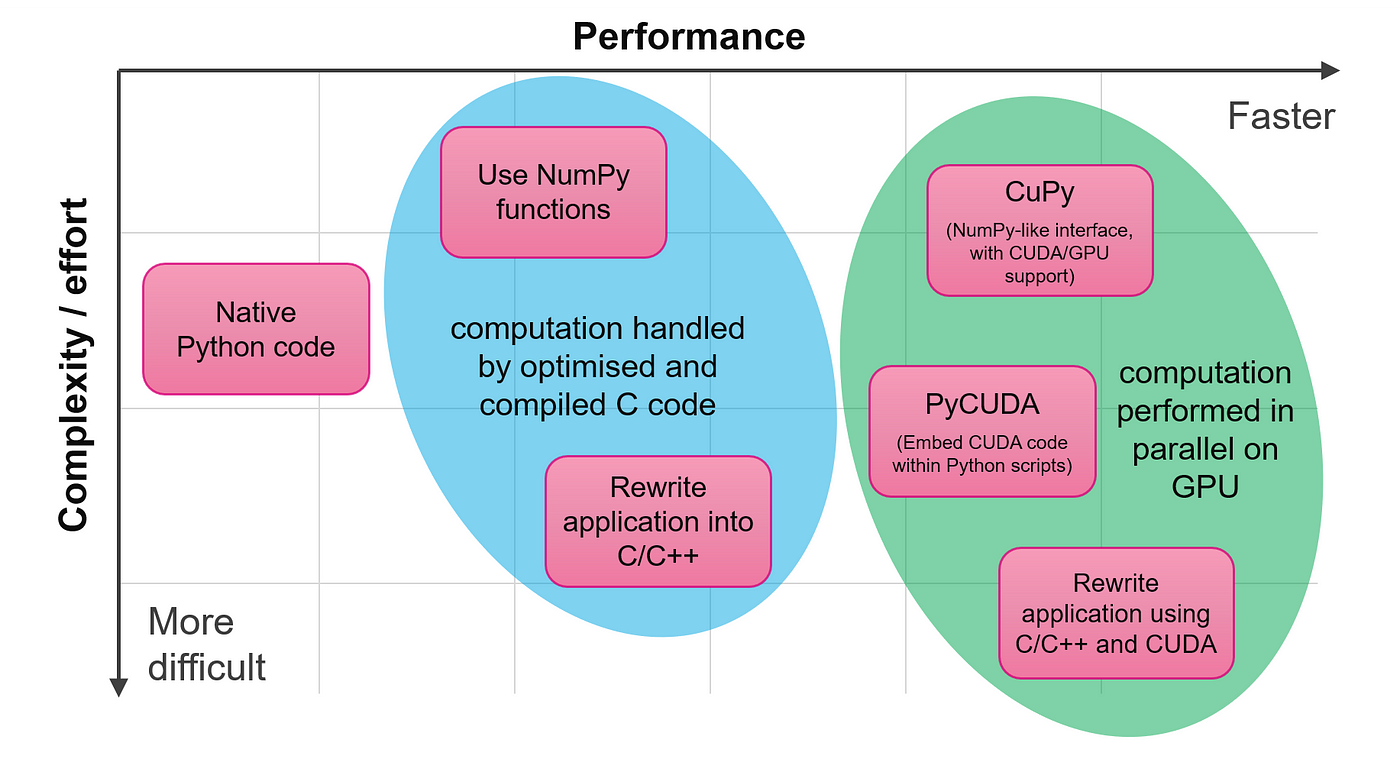

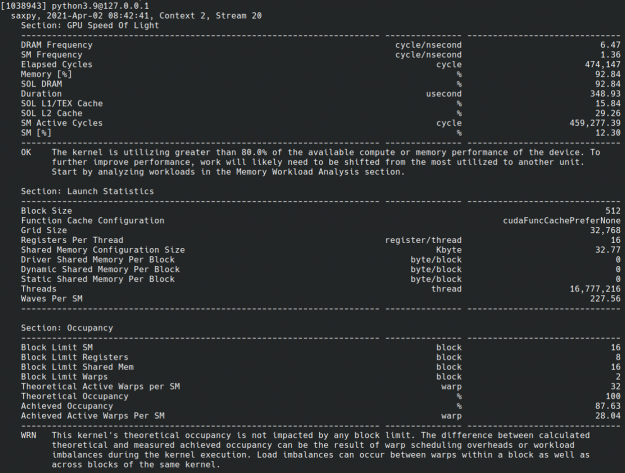

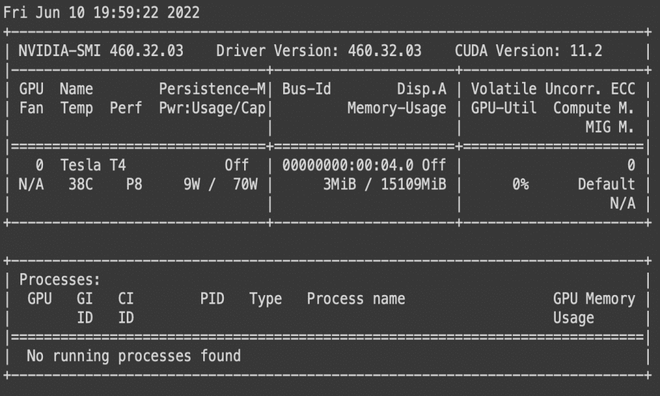

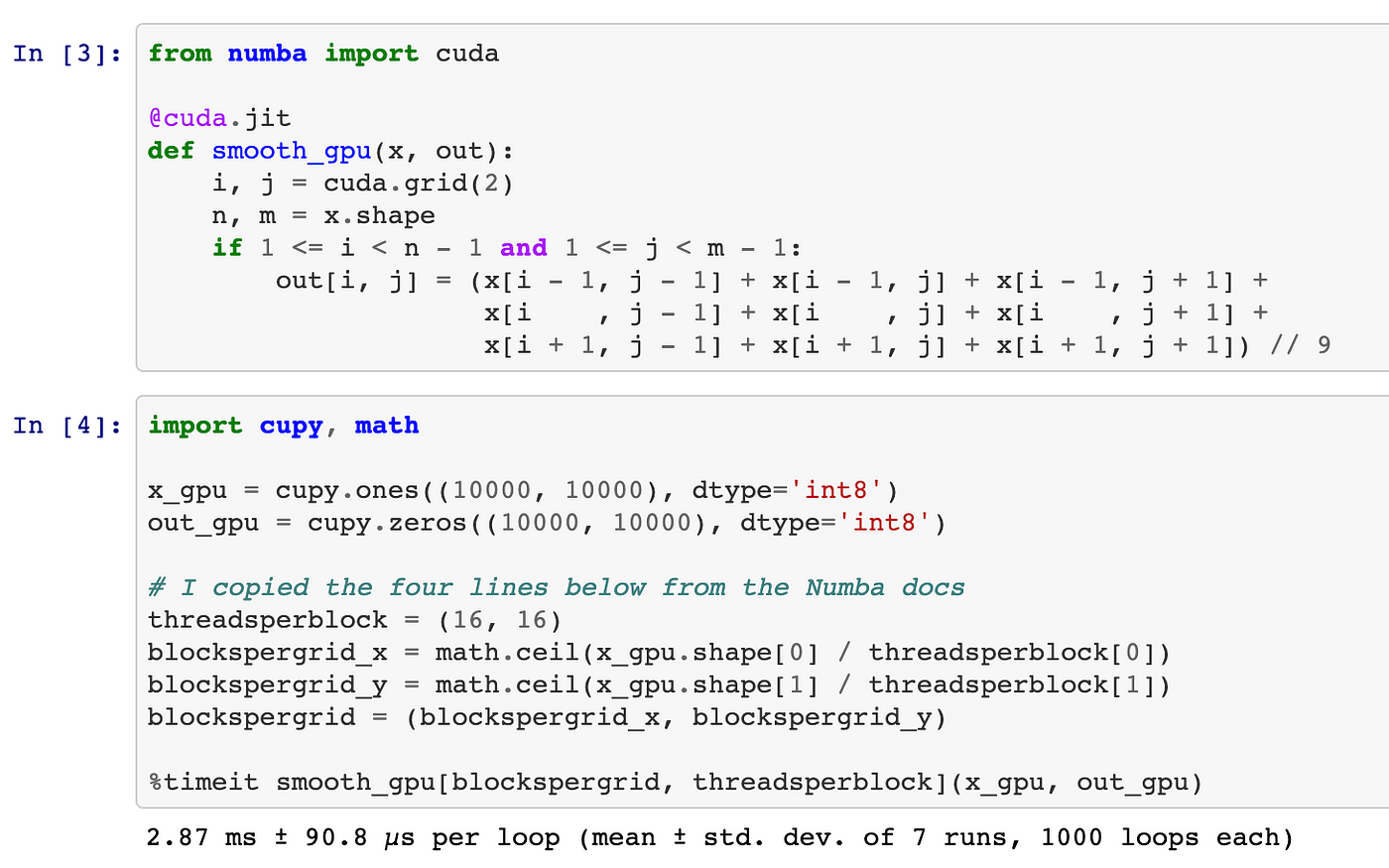

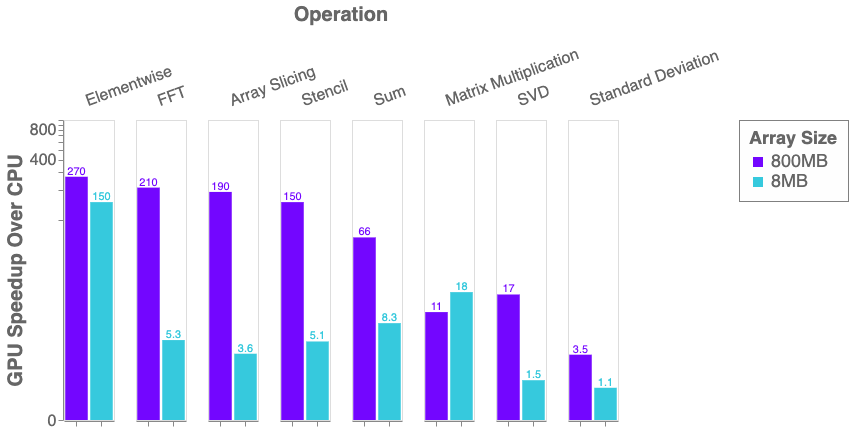

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

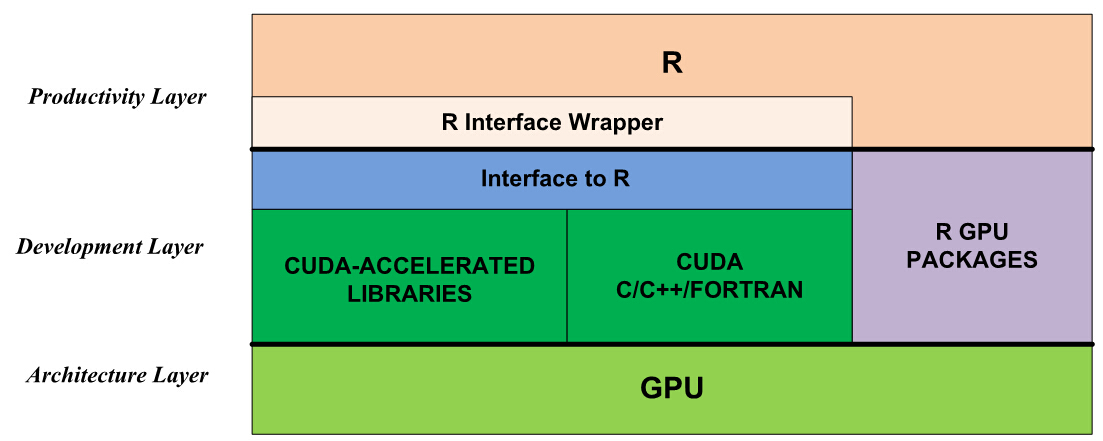

Computation | Free Full-Text | GPU Computing with Python: Performance, Energy Efficiency and Usability

A Complete Introduction to GPU Programming With Practical Examples in CUDA and Python - Cherry Servers